High-Speed Vision

The Problem

When a robot has to react immediately to real-world events detected by a vision sensor, high-speed vision is required. This may be a visual servoing task, i.e., one in which the vision sensor is part of the robot’s control loop, or a reaction to a sudden event, such as catching a thrown ball. Ideally, a vision sensor should respond within one robot control cycle so as not to limit the robot’s dynamic range. Current fast digital video cameras achieve frame rates of 100 Hz and beyond.

High-speed vision tasks require computational power and data throughput that often exceed what mainstream computer platforms provide. Special-purpose systems, on the other hand, lack flexibility and demand considerable design effort and long development times.
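For a sense of scale, consider a hypothetical 8-bit monochrome camera with 640 × 480 pixels running at 100 Hz (illustrative values, not the camera used in this project). The raw data rate and the average time available per pixel are:

$$
640 \times 480\ \text{pixels} \times 1\ \tfrac{\text{byte}}{\text{pixel}} \times 100\ \text{s}^{-1} = 30.72 \times 10^{6}\ \tfrac{\text{bytes}}{\text{s}},
\qquad
t_{\text{pixel}} = \frac{1}{30.72 \times 10^{6}\ \text{pixels/s}} \approx 32.6\ \text{ns}
$$

Every operator that touches each pixel must sustain this rate, on top of the bandwidth needed to move the frames into memory.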

Methods

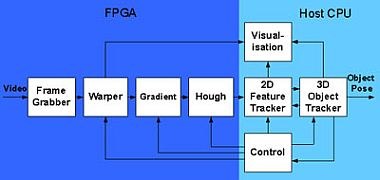

Programmable logic devices such as FPGAs (Field Programmable Gate Arrays) are an enabling technology for high-speed vision: working in close cooperation with general-purpose CPUs, FPGAs offer high speed, low latency, constant throughput, high integration, and low power consumption. To be implemented on an FPGA, an algorithm has to be mapped to a parallel, pipelined structure.

To validate the use of FPGAs for vision applications and build up the necessary know-how at our institute, we implemented our model-based 6-DoF pose tracking algorithm [1] on an FPGA platform. For this test scenario, we chose a commercially available FPGA board. We split the algorithm into a pre-processing part, implemented on the FPGA, and a high-level part, implemented on a host CPU (in our case, a Pentium III system running Linux). Three operators have to be implemented on the FPGA:

- an image rectification (warper),

- a Sobel gradient filter,

- and an optimized Hough transform for lines.

On the host CPU, the model-based tracking is performed using the line representation computed by the FPGA.
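The hand-off between the two parts can be illustrated from the host side. The Python sketch below is an assumption, not the authors' code: it shows one plausible first host step, extracting candidate lines as local maxima of the Hough accumulator delivered by the FPGA. The accumulator layout (`acc[r][t]` for ρ = `rho_min` + r and θ = t · `theta_step_deg`), the threshold, and the function name `extract_lines` are hypothetical.

```python
from typing import List, Tuple


def extract_lines(acc: List[List[int]], threshold: int, rho_min: int,
                  theta_step_deg: float) -> List[Tuple[int, float, int]]:
    """Return (rho, theta_deg, votes) for each local maximum of a Hough accumulator.

    Hypothetical layout: acc[r][t] counts votes for rho = rho_min + r and
    theta = t * theta_step_deg. A cell is reported if it has at least `threshold`
    votes and no larger value among its (up to) 8 neighbours. Theta wrap-around
    is ignored, and plateaus of equal values may yield several adjacent peaks.
    """
    n_rho, n_theta = len(acc), len(acc[0])
    lines = []
    for r in range(n_rho):
        for t in range(n_theta):
            votes = acc[r][t]
            if votes < threshold:
                continue
            is_peak = all(
                votes >= acc[rr][tt]
                for rr in range(max(r - 1, 0), min(r + 2, n_rho))
                for tt in range(max(t - 1, 0), min(t + 2, n_theta))
            )
            if is_peak:
                lines.append((rho_min + r, t * theta_step_deg, votes))
    lines.sort(key=lambda line: line[2], reverse=True)  # strongest lines first
    return lines


# Usage with a tiny, made-up accumulator (5 rho bins x 4 theta bins).
acc = [[0] * 4 for _ in range(5)]
acc[2][1], acc[2][2], acc[4][3] = 9, 4, 6
print(extract_lines(acc, threshold=5, rho_min=-2, theta_step_deg=45.0))
# [(0, 45.0, 9), (2, 135.0, 6)]
```

The resulting (ρ, θ) list is the kind of compact line representation a model-based tracker can match against projected model edges; how the original system performs that matching is described in [1] and [2], not here.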

The warper operator for image rectification is based on a sub-pixel-accurate coordinate transformation using a bi-cubic distortion polynomial. We evaluated several implementations of the bi-cubic distortion model and chose a fixed-point arithmetic unit optimized for the given distortion coefficients.
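The exact form of the polynomial and the word widths are not given here. The Python model below assumes a full bi-cubic polynomial u = Σ a_ij s^i t^j (i, j = 0..3) in normalized coordinates s, t, evaluated with nested Horner schemes in fixed-point arithmetic with 16 fractional bits. `FRAC_BITS`, the normalization by 1024, the coefficient values, and all function names are hypothetical. A bit-true software model like this is a common way to compare a fixed-point design against a floating-point reference before committing it to hardware.

```python
from typing import List, Sequence

FRAC_BITS = 16  # hypothetical word format: every value carries 16 fractional bits


def to_fixed(v: float) -> int:
    """Quantize a real number to fixed point (round to nearest)."""
    return int(round(v * (1 << FRAC_BITS)))


def fixed_mul(a: int, b: int) -> int:
    """Multiply two fixed-point numbers; the arithmetic right shift truncates
    toward minus infinity, as a plain hardware shifter would."""
    return (a * b) >> FRAC_BITS


def horner_fixed(coeffs: Sequence[int], v: int) -> int:
    """Evaluate sum(coeffs[k] * v**k) as ((c3*v + c2)*v + c1)*v + c0."""
    acc = coeffs[-1]
    for c in reversed(coeffs[:-1]):
        acc = fixed_mul(acc, v) + c
    return acc


def bicubic_fixed(A: List[List[int]], s: int, t: int) -> int:
    """u = sum_{i,j=0..3} A[i][j] * s**i * t**j, all operands in fixed point.

    The inner Horner pass (in t) yields one coefficient per power of s,
    which the outer Horner pass (in s) then combines.
    """
    return horner_fixed([horner_fixed(A[i], t) for i in range(4)], s)


def bicubic_float(a: List[List[float]], s: float, t: float) -> float:
    """Floating-point reference for checking the fixed-point result."""
    return sum(a[i][j] * s**i * t**j for i in range(4) for j in range(4))


# Made-up coefficients in normalized coordinates (pixel / 1024): mostly the
# identity u = s, plus small cubic and mixed terms standing in for distortion.
a = [[0.0] * 4 for _ in range(4)]
a[0][0], a[1][0], a[3][0], a[1][2] = 0.01, 1.0, -0.05, 0.02
A = [[to_fixed(c) for c in row] for row in a]

x, y = 700, 300  # pixel position in the rectified image
u_fixed = bicubic_fixed(A, to_fixed(x / 1024), to_fixed(y / 1024))
u_float = bicubic_float(a, x / 1024, y / 1024)
error_px = abs(u_fixed / (1 << FRAC_BITS) - u_float) * 1024
print(f"source column: {u_float * 1024:.3f} px, fixed-point error: {error_px:.4f} px")
```

The second output coordinate would be evaluated the same way with its own coefficient set. Rerunning the comparison with smaller `FRAC_BITS` values shows how narrow the datapath can become before the error exceeds a sub-pixel budget.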

For the gradient calculation, we implemented two parallel 7×7 neighborhood operators that realize the vertical and horizontal Sobel gradient filters at pixel rate with minimal pipeline delay.
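The coefficients of the 7×7 operators are not listed here, so the Python model below uses the standard 3×3 Sobel kernels as a stand-in. What it is meant to show is the structure that makes pixel-rate filtering possible: k-1 cascaded line buffers plus a k×k window, so that one gradient pair leaves the pipeline per incoming pixel. The constructor accepts any square kernel pair, so 7×7 kernels would give six line buffers. The class name `StreamingGradient` and its interface are hypothetical.

```python
from collections import deque
from typing import List, Optional, Sequence, Tuple

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


class StreamingGradient:
    """Pixel-rate k x k gradient filter, modeled on an FPGA pipeline.

    Pixels arrive one per call in raster order. Only k-1 image rows are stored
    (line buffers, block RAM in hardware) plus a k x k window (registers); both
    kernels are applied to the same window in parallel.
    """

    def __init__(self, width: int,
                 kx: Sequence[Sequence[int]] = SOBEL_X,
                 ky: Sequence[Sequence[int]] = SOBEL_Y) -> None:
        self.k, self.width = len(kx), width
        self.kx, self.ky = kx, ky
        # Cascaded line buffers: each one delays its input by exactly one image row.
        self.lines = [deque([0] * width, maxlen=width) for _ in range(self.k - 1)]
        self.window: List[List[int]] = [[0] * self.k for _ in range(self.k)]
        self.row = self.col = 0

    def push(self, pixel: int) -> Optional[Tuple[int, int]]:
        """Consume one pixel; return (gx, gy) once a full window is available.

        The result belongs to the pixel k//2 rows and k//2 columns behind the
        input, i.e. the pipeline latency is width*(k//2) + k//2 pixels.
        """
        column, carry = [pixel], pixel
        for buf in self.lines:
            delayed = buf[0]    # same column, one row further up
            buf.append(carry)   # maxlen makes the deque drop buf[0]
            column.append(delayed)
            carry = delayed
        column.reverse()        # top row first
        for r in range(self.k):  # shift window left; new column enters on the right
            self.window[r].pop(0)
            self.window[r].append(column[r])

        result = None
        if self.row >= self.k - 1 and self.col >= self.k - 1:
            gx = sum(self.kx[i][j] * self.window[i][j]
                     for i in range(self.k) for j in range(self.k))
            gy = sum(self.ky[i][j] * self.window[i][j]
                     for i in range(self.k) for j in range(self.k))
            result = (gx, gy)
        self.col += 1
        if self.col == self.width:
            self.col, self.row = 0, self.row + 1
        return result


image = [[0, 0, 10, 10, 10]] * 3  # vertical step edge, 3 rows x 5 columns
sobel = StreamingGradient(width=5)
outputs = []
for row in image:
    for p in row:
        g = sobel.push(p)
        if g is not None:
            outputs.append(g)
print(outputs)  # [(40, 0), (40, 0), (0, 0)]
```

In hardware, both kernel sums are computed in parallel from the same window registers, which is why adding the second (vertical) filter costs logic but no extra latency.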

An optimized implementation of the Hough transform for lines uses the CORDIC (COordinate Rotation DIgital Computer) algorithm to compute the required trigonometric functions. The resulting Hough accumulator is transferred to the host at the incoming frame rate.
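How the Hough transform was optimized is not described here, so the Python model below shows only the textbook combination: CORDIC in rotation mode evaluates ρ = x cos θ + y sin θ using shifts, adds, and a small arctangent table, and every edge pixel votes once per θ bin. The signed-ρ parameterization with θ in [-90°, 90°), the constants `ITER` and `FRAC`, and the function names are assumptions. Keeping θ in this range avoids the quadrant pre-rotation that angles beyond CORDIC's convergence range (about ±99.9°) would otherwise require.

```python
import math
from typing import Iterable, List, Tuple

ITER = 16  # hypothetical number of CORDIC micro-rotations
FRAC = 16  # hypothetical fractional bits for coordinates and angles
# arctan(2^-i) in fixed point; a small ROM in hardware.
ATAN_TABLE = [round(math.atan(2.0 ** -i) * (1 << FRAC)) for i in range(ITER)]
# CORDIC gain K = prod(sqrt(1 + 2^-2i)) ~ 1.6468; its reciprocal is applied once at the end.
INV_GAIN = round((1 << FRAC) / math.prod(math.sqrt(1.0 + 2.0 ** (-2 * i)) for i in range(ITER)))


def cordic_rho(x: int, y: int, theta_fx: int) -> int:
    """rho = x*cos(theta) + y*sin(theta), computed by CORDIC rotation mode.

    Rotating (x, y) by -theta leaves K*(x*cos(theta) + y*sin(theta)) in the
    x register. theta_fx is theta in radians with FRAC fractional bits; |theta|
    must stay within about pi/2, well inside CORDIC's ~1.74 rad convergence range.
    Each iteration uses only shifts, adds and a table lookup.
    """
    xs, ys, z = x << FRAC, y << FRAC, -theta_fx
    for i in range(ITER):
        if z >= 0:  # rotate counter-clockwise by atan(2^-i)
            xs, ys, z = xs - (ys >> i), ys + (xs >> i), z - ATAN_TABLE[i]
        else:       # rotate clockwise by atan(2^-i)
            xs, ys, z = xs + (ys >> i), ys - (xs >> i), z + ATAN_TABLE[i]
    # Remove the gain (one constant multiply) and round to the nearest integer rho.
    return (xs * INV_GAIN + (1 << (2 * FRAC - 1))) >> (2 * FRAC)


def hough_vote(points: Iterable[Tuple[int, int]], n_theta: int,
               rho_max: int) -> List[List[int]]:
    """Plain Hough voting into acc[rho + rho_max][t], theta_t = -pi/2 + t*pi/n_theta."""
    thetas = [round((-math.pi / 2 + t * math.pi / n_theta) * (1 << FRAC))
              for t in range(n_theta)]
    acc = [[0] * n_theta for _ in range(2 * rho_max + 1)]
    for x, y in points:
        for t, theta_fx in enumerate(thetas):
            rho = cordic_rho(x, y, theta_fx)
            if -rho_max <= rho <= rho_max:
                acc[rho + rho_max][t] += 1
    return acc


# Ten edge pixels on the horizontal line y = 5 vote into 4 theta bins.
acc = hough_vote([(x, 5) for x in range(10)], n_theta=4, rho_max=20)
print(acc[20 - 5][0])  # 10: all votes land at theta = -90 deg, rho = -5
```

In hardware, the `ITER` micro-rotations would typically be unrolled into `ITER` pipeline stages, so that a new (x, y, θ) triple can enter on every clock cycle.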

Results

FPGA-powered pre-processing stages: distorted input image, rectified image, gradient image, and angle of the gradient.

A more detailed description can be found in [2].

[1] Wunsch, P., Hirzinger, G.: Real-Time Visual Tracking of 3-D Objects with Dynamic Handling of Occlusion. Proc. IEEE International Conference on Robotics and Automation (ICRA), Albuquerque, NM, 1997.

[2] Jörg, S., Langwald, J., Nickl, M.: FPGA based Real-Time Visual Servoing. Proc. International Conference on Pattern Recognition (ICPR), Cambridge, UK, 2004 (CD-ROM).