Online Surface Reconstruction

The Problem

The development of range sensors allows 3D models to be generated by contactless measurement of an object's surface. These models can be used for virtual reality applications, such as virtual museums or product presentations, but also for path planning and collision avoidance in robotics.

The models can be acquired manually or automatically. In the first case, a user manually guides the sensor around the object (3D-Modeller). An external tracking system provides the 6-DoF pose of the sensor, which is used to transform the local range data into a global frame. In the second case, the range sensor is mounted on an active manipulator, such as a robot.

The generated 3D data is unfiltered and unorganized: it can contain inaccurate sample points and outliers caused by bad measurement conditions, and the points are not arranged in any particular order relative to the sampled surface. Moreover, the data is a pure point set that carries no information about the connectivity between points.

For that reason, online data processing and model generation greatly aid these systems. In the hand-guided scanner application, the model can be displayed online and used as visual feedback for the user. In the automatic application, the model is used for collision avoidance and for adapting the robot's trajectory (next best view, NBV); see Autonomous C-Space Exploration and Object-Inspection.

Methods

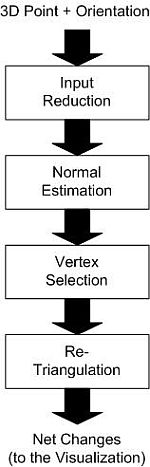

The goal of this reconstruction approach is to successively generate a single triangle mesh that approximates the unknown, measured surface by incrementally inserting the sensor data. In order to handle data from generic sensor types, the insertion is done point by point. This online approach can be described as a four-step forward processing chain.

The four steps are explained in the following.
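To make the data flow of the chain concrete, here is a minimal sketch of how one measured point could be pushed through the four stages. The function name `process_point` and the four callable interfaces are hypothetical and chosen only for illustration. The sketch also assumes that the selection stage looks at every point whose normal was touched by the update, not just at the new point.

```python
def process_point(point, accept, update_normals, select_vertices, retriangulate):
    """Push one measured point through the four stages in order.

    Each stage is a caller-supplied callable (hypothetical interfaces):
      accept(point) -> bool                  # 1. input reduction
      update_normals(point) -> list of ids   # 2. points whose normals changed
      select_vertices(ids) -> list of ids    # 3. points with reliable normals
      retriangulate(vertex_id) -> None       # 4. local mesh update
    Returns the number of vertices inserted into the mesh.
    """
    if not accept(point):
        return 0  # redundant point: nothing further happens
    touched = update_normals(point)
    selected = select_vertices(touched)
    for vertex_id in selected:
        retriangulate(vertex_id)
    return len(selected)


# Usage with trivial stand-in stages (illustration only).
inserted = []
count = process_point(
    (0.0, 0.0, 0.0),
    accept=lambda p: True,
    update_normals=lambda p: [0, 1, 2],
    select_vertices=lambda ids: [i for i in ids if i != 1],
    retriangulate=inserted.append,
)
```

Because every stage only consumes the output of the previous one, a point that fails an early stage causes no work in the later ones.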

1. Input Reduction

Multiple overlapping sweeps with the scanner can generate a local point density that is higher than the accuracy of the sensor justifies. This creates redundancy in the data, which increases the computational effort without improving the result. For this reason, the density of the measured point cloud has to be limited. This is done by an initial point reduction at the input.
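One simple way to limit the density is to reject every new point that lies closer than a minimum distance to an already accepted point. The following is a minimal sketch of that idea, not necessarily the reduction used in the system. The class name `PointReducer`, the parameter `min_dist`, and the hash grid used for the lookup are assumptions made for illustration.

```python
import math
from collections import defaultdict


class PointReducer:
    """Rejects new points closer than min_dist to an already accepted point."""

    def __init__(self, min_dist):
        self.min_dist = min_dist
        self.grid = defaultdict(list)  # cell index -> accepted points in that cell

    def _cell(self, p):
        # Cell size equals min_dist, so close points are in adjacent cells.
        return tuple(math.floor(c / self.min_dist) for c in p)

    def insert(self, p):
        """Return True if p is accepted, False if it is redundant."""
        cx, cy, cz = self._cell(p)
        # Any point within min_dist of p lies in one of the 27 surrounding cells.
        for dx in (-1, 0, 1):
            for dy in (-1, 0, 1):
                for dz in (-1, 0, 1):
                    for q in self.grid.get((cx + dx, cy + dy, cz + dz), ()):
                        if math.dist(p, q) < self.min_dist:
                            return False
        self.grid[(cx, cy, cz)].append(p)
        return True


# Usage: two of the four incoming points are too close to accepted ones.
reducer = PointReducer(min_dist=1.0)
stream = [(0.0, 0.0, 0.0), (0.2, 0.1, 0.0), (1.5, 0.0, 0.0), (1.4, 0.3, 0.0)]
kept = [p for p in stream if reducer.insert(p)]
```

The grid keeps each insertion test local, so the cost per point does not grow with the total size of the point cloud.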

2. Estimation of Surface Normals

When a new point has passed the reduction step, the surface normal at this point is estimated. This is done by a least-squares fit of a tangent plane through the neighbourhood of the new point, using the covariance matrix of this neighbourhood. (The neighbourhood of a point consists of all points that lie closer to it than a given distance.) Because the new point also belongs to the neighbourhoods of its neighbours, their normals have to be updated as well.
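The tangent plane fit can be sketched as follows. The normal of the least-squares plane is the eigenvector of the neighbourhood's covariance matrix C that belongs to the smallest eigenvalue. To keep the example dependency-free, this sketch finds that vector by power iteration on trace(C)·I − C instead of a full eigen-decomposition; this is a swapped-in technique for illustration. The function names, the brute-force neighbour search, and the start vector are likewise assumptions.

```python
import math


def neighbourhood(points, centre, radius):
    """All points closer than radius to centre (brute force for clarity)."""
    return [p for p in points if math.dist(p, centre) < radius]


def covariance(points):
    """3x3 covariance matrix of a list of 3D points."""
    n = len(points)
    mean = [sum(p[i] for p in points) / n for i in range(3)]
    return [[sum((p[i] - mean[i]) * (p[j] - mean[j]) for p in points) / n
             for j in range(3)] for i in range(3)]


def estimate_normal(points, iterations=200):
    """Unit normal of the least-squares plane through points, or None.

    The dominant eigenvector of trace(C) * I - C is the eigenvector of C
    with the smallest eigenvalue, i.e. the plane normal. Its sign is arbitrary.
    """
    if len(points) < 3:
        return None  # a plane needs at least three points
    c = covariance(points)
    trace = c[0][0] + c[1][1] + c[2][2]
    m = [[(trace if i == j else 0.0) - c[i][j] for j in range(3)]
         for i in range(3)]
    v = [0.3, 0.5, 0.8]  # arbitrary start, assumed not orthogonal to the normal
    for _ in range(iterations):
        w = [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            return None  # all points coincide, normal undefined
        v = [x / norm for x in w]
    return v


# Usage: samples of the plane z = 0, so the normal should be (0, 0, +-1).
plane = [(float(x), float(y), 0.0) for x in range(-2, 3) for y in range(-2, 3)]
nbrs = neighbourhood(plane, (0.0, 0.0, 0.0), radius=1.5)
normal = estimate_normal(nbrs)
```

In an incremental setting, the same estimate would be recomputed for every point whose neighbourhood contains the newly inserted point.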

3. Vertex Selection

A "vertex selection" step is needed because the points arrive incrementally and in no particular order. In the beginning, the neighbourhoods used for normal estimation contain only a few points, which results in poor estimates. For this reason, a new point should not be inserted into the model (triangle net) before its normal has been estimated reliably. This also filters spikes and outliers, because they have only a small neighbourhood or none at all.

The average direction change of the estimated normal is used as the criterion for a "good" point. Only points with a low change are used for the model and passed on to the next step.
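The selection criterion could be implemented along the following lines. This is a sketch under stated assumptions: the text does not say over how many updates the change is averaged or which threshold is used, so the window size, the 5 degree limit, and the names `NormalTracker` and `is_stable` are hypothetical.

```python
import math
from collections import deque


def angle_between(n1, n2):
    """Angle in radians between two unit normals, ignoring their sign."""
    dot = abs(sum(a * b for a, b in zip(n1, n2)))
    return math.acos(min(1.0, dot))


def unit(v):
    """Scale a non-zero vector to length one."""
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)


class NormalTracker:
    """Tracks how much one point's estimated normal changes between updates."""

    def __init__(self, window=3, max_avg_change=math.radians(5.0)):
        self.changes = deque(maxlen=window)  # most recent direction changes
        self.max_avg_change = max_avg_change
        self.normal = None

    def update(self, normal):
        """Record a new normal estimate for this point."""
        if self.normal is not None:
            self.changes.append(angle_between(self.normal, normal))
        self.normal = normal

    def is_stable(self):
        """True once the window is full and the average change is small."""
        if len(self.changes) < self.changes.maxlen:
            return False
        return sum(self.changes) / len(self.changes) <= self.max_avg_change


# Usage: the estimate converges towards (0, 0, 1) as neighbours arrive.
tracker = NormalTracker()
history = []
for tilt in (0.5, 0.2, 0.05, 0.02, 0.01, 0.0):
    tracker.update(unit((tilt, 0.0, 1.0)))
    history.append(tracker.is_stable())
```

A point with few or no neighbours never fills the window with small changes, so spikes and outliers stay unselected in this scheme.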

4. Local Re-triangulation

Finally, the selected vertices are incrementally inserted into the model (triangle net). The net is realized as a set of vertices connected by edges. Inserting a vertex modifies the net in the vicinity of the new vertex by adding and removing edges.
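A net of vertices connected by edges can be represented with a simple adjacency structure, as in the following sketch. It only illustrates the data structure and one possible local modification. The class `TriangleNet`, its methods, and the particular edges that are removed and added in the usage example are assumptions; the rule the system uses to choose those edges is not described here.

```python
from collections import defaultdict
from itertools import combinations


class TriangleNet:
    """Vertices joined by undirected edges; triangles are implied by edge cycles."""

    def __init__(self):
        self.vertices = {}                # vertex id -> (x, y, z)
        self.adjacent = defaultdict(set)  # vertex id -> ids of connected vertices

    def add_vertex(self, vid, position):
        self.vertices[vid] = position

    def add_edge(self, a, b):
        self.adjacent[a].add(b)
        self.adjacent[b].add(a)

    def remove_edge(self, a, b):
        self.adjacent[a].discard(b)
        self.adjacent[b].discard(a)

    def triangles(self):
        """All vertex triples whose three connecting edges exist."""
        found = set()
        for v, nbrs in self.adjacent.items():
            for a, b in combinations(sorted(nbrs), 2):
                if b in self.adjacent.get(a, ()):
                    found.add(tuple(sorted((v, a, b))))
        return sorted(found)


# Usage: a square split by the diagonal 0-2 into two triangles.
net = TriangleNet()
corners = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
for vid, pos in enumerate(corners):
    net.add_vertex(vid, pos)
for a, b in [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]:
    net.add_edge(a, b)
before = net.triangles()

# Insert vertex 4 at the centre: drop the diagonal, connect 4 to all corners.
net.add_vertex(4, (0.5, 0.5, 0.0))
net.remove_edge(0, 2)
for corner in range(4):
    net.add_edge(4, corner)
after = net.triangles()
```

Only edges incident to the affected region change; the rest of the net is left untouched, which is what keeps the update local.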

Results

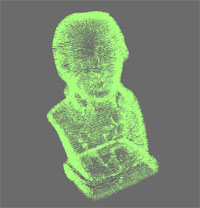

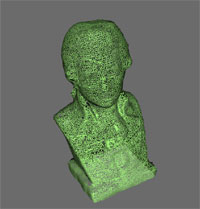

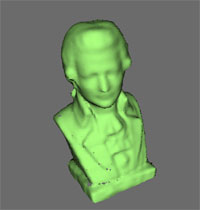

The system is used with the Multisensory 3D-Modeller and also with data from the Z+F Imager. The results show that the system works well, even with large datasets. The results obtained with the 3D-Modeller, presented in the figures, show the reconstruction process. The models are already used for grasp planning.

Additionally, a result for a large dataset measured with the Z+F Imager is presented.

Future work will concentrate on these aspects:

- Improving the quality and accuracy of the generated mesh: The current normal estimation fits a tangent plane to find the surface normal. A more advanced approach is to include local curvature in the estimation algorithm. The curvature can also be used to control the radius of the neighbourhood in the vertex selection and triangulation stages.

- Extending the algorithm to accommodate multiple sensor inputs: The long-term goal is to fuse data from different 3D sensors into a single mesh online. The next step towards this is to assign a quality measure to every point according to the sensor's error model. This quality measure will be used in the processing stages to decide whether a point is inserted.

Publications

[1] T. Bodenmueller and G. Hirzinger, Online Surface Reconstruction From Unorganized 3D-Points For the DLR Hand-guided Scanner System, 2nd Symposium on 3D Data Processing, Visualization, Transmission, Thessaloniki, Greece, 2004.

[2] S. Haidacher and G. Hirzinger, Estimating Finger Contact Location and Object Pose from Contact Measurements in 3-D Grasping, Proceedings of the ICRA 2003, Taipei, Taiwan, 2003.