Light Field Cameras

A light field is a 4D dataset that offers high potential to improve the perception of future robots. Compared to normal 2D images, the two additional dimensions of a light field make it possible to extract a variety of extra information and to derive different data products. The two most common ones are 2D images focused at a specific distance and 3D depth images. We investigate the processing and usage of light field data for robotic systems, taking into account their often limited resources and computation time. Nowadays, light fields are best recorded with a plenoptic camera, which is a new vision sensor. We investigate the calibration and the general nature of such cameras, which allows us to improve the quality and accuracy of the recorded data.

The Light Field

In contrast to traditional images, a light field contains not only the light intensity at the location where a light ray hits the camera sensor but also the direction from which the light ray arrives. The intensity, i.e. the amount of light measured by the sensor, at location (u, v) and direction angles (θ, φ) is the information that forms the 4D light field. A normal 2D image, i.e. a dataset of the intensity at locations (u, v) on the sensor, can be generated from a light field through rendering. The two additional dimensions of a light field make it possible to extract a variety of data products; the most common ones are 2D images focused at a certain distance or with an extended depth of field. The generation of 3D depth images from 4D light fields is also possible.
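As a rough illustration of rendering, the sketch below stores a light field as a 4D NumPy array indexed by (u, v, θ, φ) and derives two kinds of 2D images from it. The array layout, the function names `render_image` and `refocus`, and the integer shift-and-add scheme are assumptions made for this example; they are a common textbook approach and not necessarily the rendering used in our processing chain.

```python
import numpy as np

def render_image(light_field: np.ndarray) -> np.ndarray:
    """Average over both directional axes to obtain a conventional 2D image.

    light_field has the assumed shape (U, V, T, P): sensor location (u, v)
    and discretized direction samples (theta, phi).
    """
    if light_field.ndim != 4:
        raise ValueError("expected a 4D array of shape (U, V, T, P)")
    return light_field.mean(axis=(2, 3))

def refocus(light_field: np.ndarray, shift: float) -> np.ndarray:
    """Shift-and-add refocusing (illustrative only).

    Each direction sample is treated as one view, shifted in proportion to
    its offset from the central direction, and all views are averaged.
    np.roll wraps around at the borders, which a real renderer would avoid.
    """
    n_u, n_v, n_t, n_p = light_field.shape
    t_c, p_c = (n_t - 1) / 2, (n_p - 1) / 2
    out = np.zeros((n_u, n_v), dtype=float)
    for t in range(n_t):
        for p in range(n_p):
            du = int(round(shift * (t - t_c)))
            dv = int(round(shift * (p - p_c)))
            out += np.roll(light_field[:, :, t, p], (du, dv), axis=(0, 1))
    return out / (n_t * n_p)

# Synthetic example data: 6 x 8 locations, 3 x 3 direction samples.
rng = np.random.default_rng(0)
lf = rng.random((6, 8, 3, 3))
image = render_image(lf)
refocused = refocus(lf, shift=1.0)
```

With `shift=0` the refocused image equals the plain average, and other values of `shift` move the focal plane. This shows how several data products can come from a single recording.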

We investigate the many possible uses of light fields for robotic applications, given that a single recording makes it possible to create multiple directly related data products, e.g. high-resolution 3D depth images and 2D images. Due to the four dimensions of light fields, their processing currently requires relatively high computational power. To make them usable for robots, where both the available processing time and the available resources are often limited, we seek ways to increase the efficiency as well as the quality of light field processing.

Plenoptic Cameras

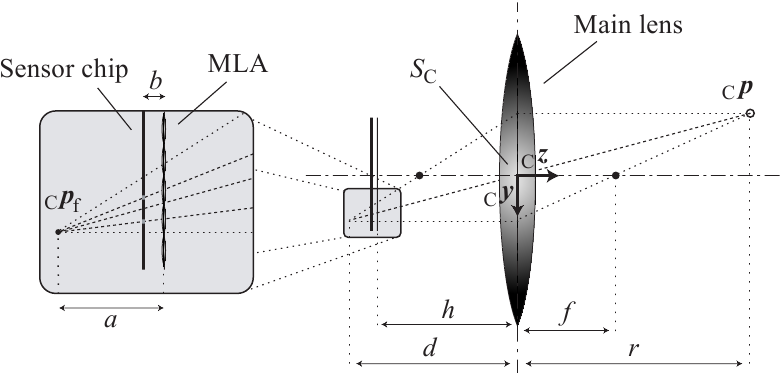

Light fields are best recorded with plenoptic cameras, which are slightly modified off-the-shelf cameras. They have novel capabilities, as they allow for truly passive, high-resolution range sensing through a single camera lens. In a plenoptic camera, an additional micro lens array (MLA) is mounted in front of the camera sensor, as shown in Fig. 1.

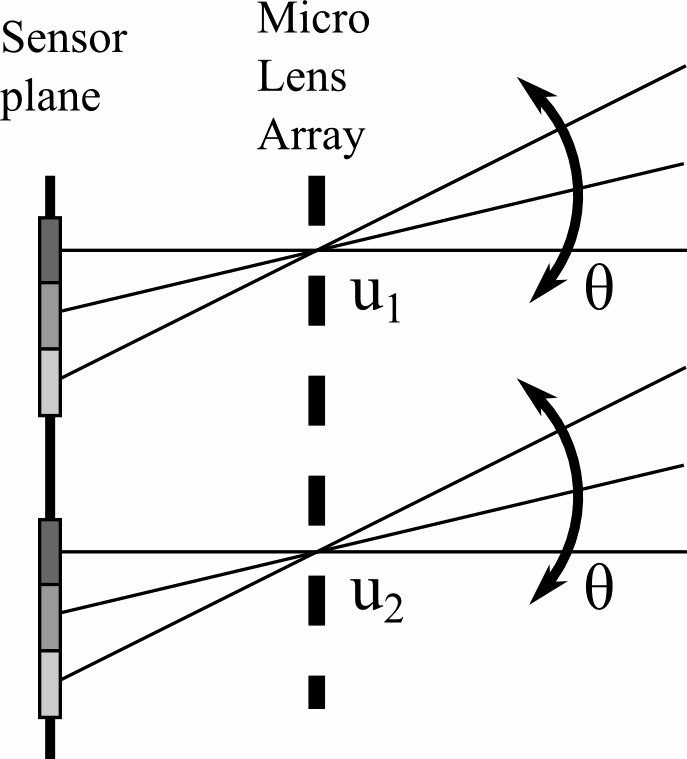

The MLA is a matrix of lenses, each with a diameter of tens of micrometers. The field of view of each micro lens encloses several hundred sensor pixels. A micro lens refracts the incoming light rays such that, depending on their direction, they fall on different pixels under that micro lens. In this way, the four dimensions of a light field are recorded: the location (u, v) corresponds to the micro lens, and the directional angles (θ, φ) correspond to a specific pixel under that micro lens, as shown in Fig. 2.
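The mapping from sensor pixels to the four light field dimensions can be sketched as a simple array reshaping. The example assumes an idealized MLA: a square, axis-aligned grid in which every micro lens covers exactly `n` x `n` pixels. Real arrays are generally not perfectly aligned with the pixel grid, so the micro lens centers have to be calibrated first; the function name `decode_raw_image` is hypothetical.

```python
import numpy as np

def decode_raw_image(raw: np.ndarray, n: int) -> np.ndarray:
    """Rearrange a raw sensor image into a 4D array L[u, v, i, j].

    (u, v) is the index of the micro lens, (i, j) is the pixel offset
    under that micro lens and stands in for the direction sample.
    Assumes each micro lens covers an n x n block of pixels (idealized).
    """
    rows, cols = raw.shape
    if rows % n or cols % n:
        raise ValueError("sensor size must be a multiple of n")
    n_u, n_v = rows // n, cols // n
    # raw[u*n + i, v*n + j] -> blocks[u, i, v, j] -> L[u, v, i, j]
    return raw.reshape(n_u, n, n_v, n).transpose(0, 2, 1, 3)

# Tiny example: a 6 x 6 sensor with 3 x 3 pixels under each micro lens.
raw = np.arange(36).reshape(6, 6)
lf = decode_raw_image(raw, n=3)
```

Here `lf[1, 0, 2, 1]` is the pixel at row 5, column 1 of the raw image, i.e. the pixel with offset (2, 1) under the micro lens in grid row 1, column 0.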

Since cameras are considered measuring systems in robot vision, one task is to obtain metric information from recordings. We therefore investigate the calibration and the general nature of plenoptic cameras in order to improve the quality and accuracy of the recorded data.

Plenoptic Camera Calibration

Commercially available plenoptic cameras presently deliver range data in non-metric units, which is a barrier to novel applications, e.g. in the realm of robotics. We therefore devised a novel approach that leverages traditional camera calibration methods in order to reduce the skill required for the calibration procedure and to increase its accuracy.

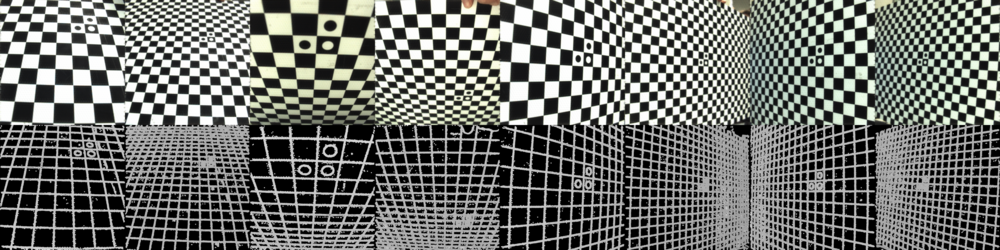

The calibration procedure starts out with a set of images of a known calibration plate, as in the standard method for pinhole cameras, see DLR CalDe and DLR CalLab.

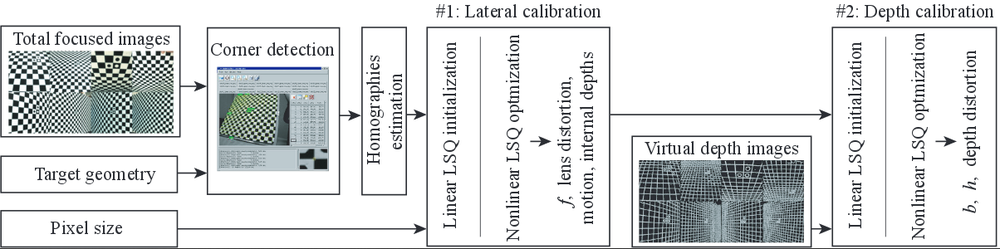

However, the calibration algorithm decouples the calibration of the traditional capabilities of plenoptic cameras from the calibration of their novel plenoptic features related to depth estimation, as shown in Fig. 3.

Hence, these two steps of the calibration are performed with two different types of input data, both obtained from the 4D light field of a single recording: 2D images with an extended depth of field and 3D depth images.

In this way, the higher noise levels of the latter novel features will not affect the estimation of traditional parameters like the focal length and the radial lens distortion. Further advantages of the decoupled calibration are: First, different robustification methods can be applied to the input data in accordance with their specific propensity toward outliers. Second, both subtasks are simpler, enabling novel, rapid initialization schemes for all parameters, where the only required physical data are the metric size of the sensor elements (pixels) and the local geometry of the calibration pattern. Third, neither the correlated lens and depth distortion models nor the inner lengths (focal length f, distance b between the MLA and the sensor, and distance h between the MLA and the main lens plane) will get entangled during optimization (see Fig. 1 for the variable names).

The accuracy of the calibration results corroborates our belief that monocular plenoptic imaging is a disruptive technology that is capable of conquering new application areas as well as traditional imaging domains.

Single-Shot Metric Depth Estimation

Light field imaging provides a promising solution for estimating metric depth with a single device by using a unique lens configuration. However, its application to single-view dense metric depth is under-addressed, mainly due to the technology's high cost, the lack of public benchmarks, and proprietary geometrical models and software.

We explored the potential of focused plenoptic cameras for dense metric depth and proposed a novel pipeline that predicts metric depth from a single plenoptic camera shot. The pipeline first generates a sparse metric point cloud using machine learning. This point cloud is then used to scale and align a dense relative depth map regressed by a foundation depth model, resulting in dense metric depth.
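The scale-and-align step can be sketched as a least-squares fit of one scale and one shift between the relative depth map and the sparse metric points. This is a minimal illustration under simplifying assumptions (a plain, non-robust fit in depth space, with the hypothetical function name `align_relative_depth`); the alignment used in the published pipeline may differ.

```python
import numpy as np

def align_relative_depth(relative, sparse_metric, mask):
    """Fit metric ~= s * relative + t at the sparse points, apply it densely.

    relative:      dense relative depth map, shape (H, W)
    sparse_metric: metric depth values, valid only where mask is True
    mask:          boolean array marking pixels with a metric measurement
    Returns the dense metric depth map and the fitted (s, t).
    """
    x = relative[mask]
    y = sparse_metric[mask]
    if x.size < 2:
        raise ValueError("need at least two sparse metric points")
    design = np.stack([x, np.ones_like(x)], axis=1)
    (s, t), *_ = np.linalg.lstsq(design, y, rcond=None)
    return s * relative + t, s, t

# Synthetic check: the true metric depth is 2.5 * relative + 0.4.
rng = np.random.default_rng(1)
relative = rng.random((5, 5))
true_metric = 2.5 * relative + 0.4
mask = np.zeros((5, 5), dtype=bool)
mask[0, 0] = mask[2, 3] = mask[4, 1] = True
sparse = np.where(mask, true_metric, 0.0)
dense, s, t = align_relative_depth(relative, sparse, mask)
```

On this noise-free example, three sparse points are enough to recover the scale and shift and hence the full metric depth map. With real data, outliers in the sparse point cloud would call for a robust estimator instead of a plain least-squares fit.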

To validate the pipeline, we curated the Light Field & Stereo Image Dataset (LFS) of real-world light field images with stereo depth labels, filling a gap in existing resources.

Selected Publications

B. Lasheras-Hernandez, K. H. Strobl, S. Izquierdo, T. Bodenmüller, R. Triebel, and J. Civera. Single-Shot Metric Depth from Focused Plenoptic Cameras. Proceedings of the IEEE International Conference on Robotics and Automation (ICRA 2025), Atlanta, GA, USA, May 19-23 2025. [elib]

B. Lasheras-Hernandez, K. H. Strobl, and T. Bodenmüller. The Light Field & Stereo (LFS) Image Dataset. Zenodo, Nov 28 2024. https://doi.org/10.5281/zenodo.14224205 [elib]

M. Lingenauber, U. Krutz, F. A. Fröhlich, Ch. Nißler, and K. H. Strobl. In-Situ Close-Range Imaging with Plenoptic Cameras. Proceedings of the 2019 IEEE Aerospace Conference, Big Sky, MT, USA, March 2-9 2019, https://doi.org/10.1109/AERO.2019.8741956.

M. Lingenauber, U. Krutz, F. A. Fröhlich, Ch. Nißler, and K. H. Strobl. Plenoptic Cameras for In-Situ Micro Imaging (poster). Proceedings of the European Planetary Science Congress 2018, Berlin, Germany, September 16-21 2018.

U. Krutz, M. Lingenauber, K. H. Strobl, F. Fröhlich, and M. Buder. Diffraction Model of a Plenoptic Camera for In-Situ Space Exploration (poster). Proceedings of the SPIE Photonics Europe 2018 Conference, Volume 10677, Strasbourg, France, April 24-26 2018.

M. Lingenauber, K. H. Strobl, N. W. Oumer, and S. Kriegel. Benefits of Plenoptic Cameras for Robot Vision during Close Range On-Orbit Servicing Maneuvers. Proceedings of the 2017 IEEE Aerospace Conference, Big Sky, MT, USA, March 4-11 2017, pp. 1-18, https://doi.org/10.1109/AERO.2017.7943666.

K. H. Strobl and M. Lingenauber. Stepwise Calibration of Focused Plenoptic Cameras. Computer Vision and Image Understanding (CVIU), Volume 145, April 2016, pp. 140-147, ISSN 1077-3142, http://dx.doi.org/10.1016/j.cviu.2015.12.010.