Synchronisation and Calibration

The concept of multisensory data acquisition in real-time, as implemented by the DLR Multisensory 3D-Modeler, demands a time synchronisation concept as well as spatial calibration routines to display the 3D sensor data in the same world coordinate frame.

Hard-/Software Synchronisation Concept

The DLR laser-range scanner is the key component in this concept. Its interface is the CAN bus (CAN: Controller Area Network), a priority based fieldbus with realtime capabilities. Merging multiple sensors with multiple interfaces is a major problem in sensor synchronisation. This problem is solved by using the CAN bus as the master synchronisation bus for timestamps. Each sensor is supplied with the same video synchronisation pulse (generated e.g. by the video cameras), which results in synchronous measurements. The laser-range scanner generates its internal clock signal from this source. The scan head rotates with 25 turns/second, so that every 40 ms a new timestamp message is sent on the CAN bus. These messages are received by each sensor, which are thus triggered to deliver their data sets accordingly. Additionally to the laser-range scanner, the pose sensor sends its data directly via the CAN bus. Every sensor connected to the CAN bus is thus able to receive the pose for referring its local 2-D data sets to the global frame.

Each message on the CAN bus is processed according to its priority. High priority signals are command and synchronization messages, because their delivery is time critical. The synchronisation signals are of lower priority than commands, but higher than the laser-range scanner data. The pose data signal is of lowest priority: due to the timestamp a delay of this signal relative to the respective data and synchronisation message does not provoke illegal assignments, the merging can be done afterwards. The CAN bus baudrate is 1 MBit/s. The 2.5-D data sets of the laser-stripe sensor and the images of the texture sensor are merged with the pose data through timestamp identification. The data is available for applications through a unified RPC (Remote Procedure Call) via TCP/IP. This interface is the same as for the laser-range scanner data (the application layer has no access to the CAN bus, treated as an internal bus system).

All sensors should be able to measure synchronously, allowing the implementation of datafusion principles. For this reason, the sensors should not disturb or interfere with each other. Exemplarily, the laser-range scanner's laser line should not be visible in the texture sensor's images when using them simultaneously. A solution is an adjustment of the phase between laser-range scanner and texture sensor, resulting in taking camera shots while the laser is not visible. It is possible to automatically gain texture information while scanning the object's surface with the laser-range scanner. The laser-range scanner should not be interfered by the laser-stripe sensor. This is avoided by the laser-range scanner's surrounding light compensation feature. The infrared flashes of the tracking system cause noise in the measurements of the laser-range scanner. Knowing the time relation between video pulse and flash appearance allows us to exclude values measured during the flash period.

Spatial Sensor Calibration

The transformation of the data xsensor in the sensor coordinate system CoSsensor to the position sensor tool center point (tcp) coordinate system CoStcp is described by a rotation matrix Rsensor and a succeeding translation Tsensor. CoSbase describes the global coordinate frame of the pose sensor (see Fig 2). The pose sensor measures a 6-dimensional vector containing three translational parameters and three rotational angles. The transformation CoSsensor to CoStcp is different for each sensor type. They depend on the way each sensor is attached in the hand-guided device. Generally, the parameters of the matrix Rsensor and Tsensor are initially unknown. The sensor calibration deals with the identification of these 6 parameters.

Camera Calibration and Distortion Compensation

Camera calibration is the process of determining all intrinsic and extrinsic parameters for describing the projection behavior of a camera by a mathematical model. Many cameras can be modeled as pinhole cameras with two coefficients of radial lens distortion. This results in 7 intrinsic parameters. Additionally, the transformation between the camera and a world coordinate system is modeled using 6 extrinsic parameters (i.e. translation and rotation).

Distortion Compensation

The distortion of the images by the camera lenses have to be compensated to ensure a correct 3d-reconstruction. We use a 3rd order polynomial distortion model as well as a radial distortion model for this purpose. Slightly better results have been obtained with the polynomial distortion with higher efforts on the calibration process.

Stereo algorithms rely not only on known internal parameters (e.g. distortion) but also on known external calibration parameters (geometry between the stereo cameras) in order to find stereo correspondences easily along (epipolar) lines and precisely reconstruct the geometry of the measured object.

The figures above show the distorted original camera images together with the rectified stereo images.

Single Camera Calibration

Calibration is done by capturing images of an object, whose dimensions are exactly known (i.e. calibration object). A flat calibration grid permits an easy and accurate creation using an ordinary printer.

Single camera calibration involves the following steps:

Capturing several images of the calibration grid from different viewpoints.

- Automatic recognition of all calibration points (i.e. corners of squares) in the image.

- Calculating all parameters from known coordinates of all calibration points in a world coordinate system and the corresponding projections of these points in several images from different viewpoints. The calculation is done using the Zhang and Sturm and Maybank algorithm.

This results in 7 intrinsic and 6 extrinsic parameters for each viewpoint. Stereo cameras are calibrated by capturing images of the calibration grid with both cameras at the same time. For each viewpoint, the extrinsic parameters describe the transformation to a common world coordinate system. The fact that the relative transformation between both cameras is constant is not enforced during single camera calibration. Therefore, for each viewpoint, the relative transformation differs slightly. This is treated in the stereo camera calibration step.

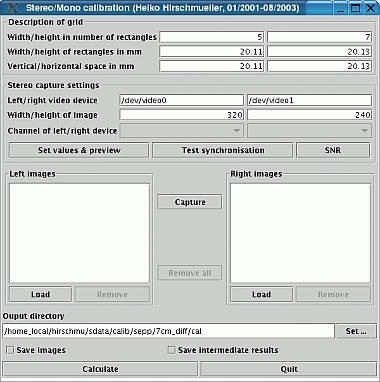

Stereo Camera Calibration

Stereo camera calibration is performed by

- Using the results of single camera calibration and calculating an initial estimate of the relative transformation between both cameras from the first viewpoint.

- Non-linear optimization over all parameters by keeping the relative transformation between the cameras constant and minimizing the reprojection error of all calibration points.

This results in 20 parameters for the stereo camera system, i.e. 7 intrinsic parameters for each of both cameras and 6 parameters for the relative transformation between both cameras. Additionally, for each viewpoint, there are 6 parameters, which describe the transformation between the world and the stereo camera.

TCP Calibration

These transformations between the world coordinate system and the stereo camera for each viewpoint are usually not required. Instead, the constant transformation between the cameras and the Tool Center Point (TCP) is often required in robotics applications.

The TCP calibration is performed by:

- Recording the transformation between a base point and the TCP for each calibration viewpoint, using the sensors of the robot on which the stereo camera is mounted.

- Calculating an initial estimate of the constant transformations between the world coordinate system and the base point as well as the constant transformation between the TCP and the stereo cameras from several corresponding base to TCP and world to stereo camera transformations, using e.g. Singular Value Decomposition.

- Non-linear optimization of both constant transformations by minimizing the reprojection error of all calibration points.

This results in 26 parameters, which describe the stereo camera system and the transformation between the stereo camera and the TCP.

Implementation

All steps are automatically performed in a user friendly Java/C implementation.

Future work includes improving the handling of the tool as well as increasing the robustness of automatic calibration grid recognition.

DLR Laser-range Scanner Calibration

For the calibration of the laser-range scanner on the tcp, we only need to determine the parameters describing the transformation from CoSsensor to CoStcp, because neither distortion nor scaling parameters need estimation. Our attempt is to measure data points on the surface of a sphere with given radius at arbitrary centre, with the laser-range scanner synchronously with the tcp pose delivered by the pose sensor. For this estimation step we use the Levenberg-Marquardt method, a widespread optimization method for nonlinear least square problems. It is a smooth combination between the Inverse-Hessian Method and the steepest descent method. A merit function has to be formulated. Its minimization delivers best-fit parameters for our problem of calculating the constant transformation between the sensor and the tcp.

The Levenberg-Marquardt algorithm needs a quality parameter, e.g. deviation, for every single measurement. As the algorithm is very unstable against outliers and outliers are more common at greater ranges, we take the increasing variance at longer sensing ranges, approximated by an exponential function as a measure of quality, such that measurements from further away will be considered to a lesser extent.

References

T. Bodenmüller, W. Sepp, M. Suppa, and G. Hirzinger. Tackling Multi-sensory 3D Data Acquisition and Fusion. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2007), pp. 2180-2185, San Diego, CA, USA, 2007.

M. Suppa and G. Hirzinger. A Novel System Approach to Multisensory Data Acquisition. Intelligent Autonomous Systems 8 (IAS-8), pp. 996-1004, Amsterdam, March 10-13, 2004.