Research for reliable and secure artificial intelligence

- DLR institute with two locations, Ulm and Sankt Augustin, each with its own research topics.

- Secure and trustworthy AI-based applications and data rooms for industry.

- Artificial intelligence is a key technology in the context of digitalisation.

- Focus: Digitalisation, Security, Artificial Intelligence, Industry 4.0

Artificial intelligence (AI) is used, for instance, in transport systems, in aerospace and in energy supply. It has been part of everyday life in modern society for a long time - and as digitalisation advances, AI becomes all the more important: it must be both secure and transparent. In an effort to strengthen research and development in this area, the German Aerospace Center (Deutsches Zentrum für Luft- und Raumfahrt; DLR) has established the Institute for AI Safety and Security. The DLR institute has now been officially opened with its two locations in Ulm and Sankt Augustin.

"Artificial intelligence is one of the most important future technologies for digitalisation. DLR is itself a pioneer in the use of the latest AI processes and methods in its core areas of aeronautics, space, energy, transport and security," says Anke Kaysser-Pyzalla, Chair of the DLR Executive Board. "The subject is of great importance for research and its use in industry and society. For this reason, one of the focal points of the DLR Institute for AI Safety and Security is ensuring that AI-based solutions are operationally secure and safe from attack." Safety-critical AI research and applications also include the organisation, processing, storage and exchange of sensitive data.

"The performance and stability of our national economy are becoming increasingly dependent on AI-driven infrastructures that must function completely reliably at all times," emphasises Anna Christmann MdB, the Federal Government's Aerospace Coordinator and Commissioner for Start-ups and the Digital Economy at the Federal Ministry for Economic Affairs and Climate Action (BMWK). "To achieve this, there has to be a good interplay between excellent research, high-performance scientific infrastructure, innovative start-ups and well-established companies. With its recognised expertise, DLR is in an excellent position to help meet the challenges ahead, together with partners from industry and science."



Human-AI interaction

At the Ulm site in Baden-Württemberg, the focus is AI safety. Here, error-free operation and operational safety are of particular interest. The AI Engineering research field develops evaluation and test methods with an engineering focus. One example of this is AI-based environment sensing, which forms a basis for automated driving, among other things. It also involves the cooperation between humans and AI: How can humans work together with continuously learning and largely automated systems? How do we successfully train AI? The researchers are developing reliable software and hardware environments for the efficient implementation of AI components. In addition to other innovative computing methods, researchers in Ulm are investigating the use of quantum computers as the basis for quantum machine learning. Up to 75 employees will work at the site.

"The DLR Institute for AI Safety and Security is an essential pillar in our state’s strategy to bring the applications of artificial intelligence and quantum computing from research to industrial application. By researching and developing secure AI technologies and systems, the Institute supports the state’s mobility and capital goods sectors. The DLR campus in Ulm is an ideal innovation space for the Institute's researchers," says Nicole Hoffmeister-Kraut, Minister of Economic Affairs, Labour and Tourism of the State of Baden-Württemberg. The DLR Institute for AI Safety and Security is located in the immediate vicinity of the DLR Institutes of Engineering Thermodynamics and of Quantum Technologies.

Processing sensitive data securely

The focus in Sankt Augustin (North Rhine-Westphalia) is on AI security - protection against external attacks. How can we be sure that AI algorithms are working properly? In what way can the AI's decisions be traced? The focus of the research is on methods that offer both protection and authorised use of sensitive data. This includes making data available only to certain users and for certain types of use, while at the same time ensuring that information worthy of protection, such as personal data, remains protected. In future, 45 DLR employees will work at the site in Sankt Augustin.
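As a rough illustration of releasing data only to certain users and for certain types of use, the following minimal Python sketch checks a request against a usage policy and strips protected fields before anything is handed out. The names `UsagePolicy` and `release`, and the example fields, are hypothetical and are not taken from the Institute's work.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UsagePolicy:
    """Hypothetical policy: who may use a data set, and for which purposes."""
    allowed_users: frozenset
    allowed_purposes: frozenset
    protected_fields: frozenset = frozenset()


def release(record: dict, user: str, purpose: str, policy: UsagePolicy) -> dict:
    """Return the record without protected fields, or raise if not authorised."""
    if user not in policy.allowed_users:
        raise PermissionError(f"user {user!r} is not authorised")
    if purpose not in policy.allowed_purposes:
        raise PermissionError(f"purpose {purpose!r} is not permitted")
    # Personal data and other protected fields are never part of the result.
    return {k: v for k, v in record.items() if k not in policy.protected_fields}


# Illustrative values only.
policy = UsagePolicy(
    allowed_users=frozenset({"analyst"}),
    allowed_purposes=frozenset({"traffic_statistics"}),
    protected_fields=frozenset({"driver_name"}),
)
record = {"driver_name": "A. Example", "speed_kmh": 50}
released = release(record, "analyst", "traffic_statistics", policy)
```

Here the same record is refused outright for an unlisted user or purpose, while an authorised request receives only the non-protected fields.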

Mona Neubaur, Minister for Economic Affairs, Industry, Climate Action and Energy of the State of North Rhine-Westphalia, emphasises the value of the Institute for social development: "We are living in a time of compounding crises and at the same time have to shape two societal transformations, one towards climate neutrality and sustainability and another towards a digital society. Artificial intelligence, in particular, as such a versatile tool, will be able to instigate the relevant changes in administration, production and logistics processes that will contribute to innovation while saving energy and other resources. Ensuring that this is also done safely and reliably is a major concern for us. With this in mind, DLR is opening the right institute at the right time in the most suitable locations."

Solutions for the mobility of the future

The DLR Institute for AI Safety and Security is focusing strongly on industrial application and networked data rooms - for example in the field of mobility. For this reason, the Institute is coordinating the GAIA-X 4 Future Mobility project family, which brings together more than 80 participants from research and industry. The work centres on developing digital services and products for the secure exchange of data between users, service providers, manufacturers and suppliers. GAIA-X is a European initiative that develops the legal and structural foundations for a decentralised digital ecosystem.

In addition, the DLR Institute for AI Safety and Security is actively involved in the Catena-X research project. Here, stakeholders from the automotive industry are establishing a unified global data exchange and services based on European standards. The emerging ecosystem and the associated services are an important application environment for AI.

Attack and operational security are key

At the opening event, the participants demonstrated what data sovereignty means in practice and how to work with GAIA-X: a vehicle collects data during operation, which is securely evaluated using specialised algorithms and made available again online via GAIA-X. Thanks to the compute-to-data approach, sensitive data does not leave the vehicle and the results can be retrieved in the vehicle.
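The compute-to-data idea described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the demonstrated system: the `Vehicle` class, the `speed_summary` function and the sample values are hypothetical, and no GAIA-X interface is modelled.

```python
from statistics import mean
from typing import Callable, List


class Vehicle:
    """Hypothetical vehicle that keeps its raw sensor data local."""

    def __init__(self, speeds_kmh: List[float]):
        self._speeds_kmh = list(speeds_kmh)  # raw data stays inside the vehicle

    def run(self, algorithm: Callable[[List[float]], dict]) -> dict:
        # The algorithm is brought to the data; only its result is returned.
        return algorithm(list(self._speeds_kmh))


def speed_summary(speeds_kmh: List[float]) -> dict:
    """Aggregate evaluation that exposes summary values, not single readings."""
    return {
        "count": len(speeds_kmh),
        "mean_kmh": mean(speeds_kmh),
        "max_kmh": max(speeds_kmh),
    }


vehicle = Vehicle([48.0, 52.0, 50.0])
result = vehicle.run(speed_summary)
```

In a real deployment the algorithm itself would have to be vetted before it runs, since an arbitrary function could simply return the raw readings; this sketch leaves that check out.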

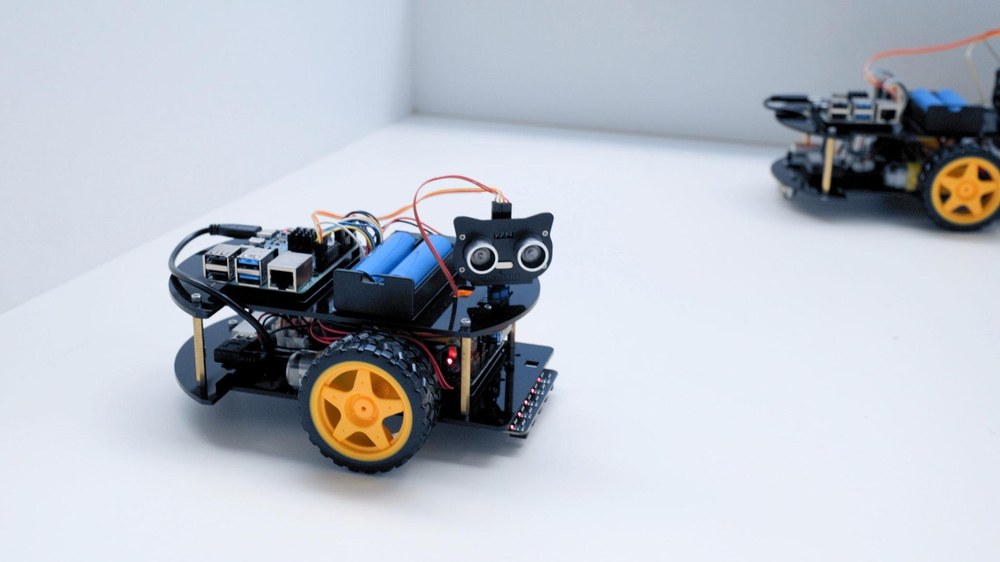

Another demonstration presented the interaction between humans and machines. A robot autonomously avoided obstacles in front of the stage and reacted spontaneously to occurrences. The Institute researches the principles of such human-machine interaction, always focusing on complex and continuously learning safety-critical systems.

"Safety and security by design is a defining concept when developing algorithms. This means making attack and operational security an essential component from the very beginning and throughout the entire process," explains Frank Köster, founding director of the DLR Institute for AI Safety and Security. "This is indispensable for us, as at DLR we primarily conduct research for ambitious AI-based applications."

Institute brings together AI research at DLR

The DLR Institute for AI Safety and Security was established almost two years ago at the Ulm and Sankt Augustin sites. It combines DLR's existing activities in the field of AI. In addition to technological issues, ethical, legal and social aspects are also important fields of research.

Here, the scientists can access unique data sets from the wider DLR research infrastructure. The high quality of the data from Earth observation or from the Test Bed Lower Saxony, for example, forms a basis for AI-oriented research and development work.