Optical Navigation for Exploration Missions

Motivation

Future space exploration missions envisage feats ranging from in-orbit rendezvous and docking to precise and safe landing on planetary bodies. These missions will take place not only in the vicinity of the Earth and Moon but will also reach targets several astronomical units away, such as Mars, asteroids, or the icy moons of Jupiter and Saturn. Such maneuvers can only be executed reliably by autonomous spacecraft that use the target body itself as a navigation reference. Optical navigation methods are seen as a promising tool for realizing this approach. Not surprisingly, this requires highly detailed information about the mission target. One major class of such data comprises the results of modern mapping missions, which provide high-quality, high-resolution, global geo-referenced maps needed, for example, for precise navigation. A second class comprises data acquired during the mission itself. An obvious application is gathering the live information that is matched against on-board maps to determine the spacecraft’s current position. Another frequent application is building a high-resolution map of the landing site from real-time 3D measurements of the underlying surface taken during the final approach.

In either case, this creates the need for significantly improved online processing capabilities as well as for novel algorithms and optical sensors. To address this challenge, the DLR Institute of Space Systems is working on several topics in the field of optical navigation.

Overview of our Activities in the Area of Optical Navigation

Our activities mainly comprise:

- Development of optical navigation sensors

- Integration of optical navigation sensors into GNC systems

- Development and maintenance of the Hardware-in-the-Loop testing facility for optical navigation algorithms and systems

Development of Optical Navigation Sensors

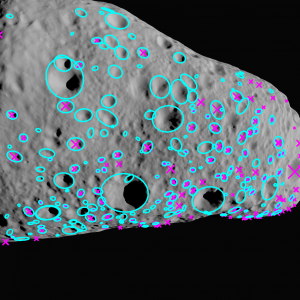

Crater Navigation

DLR is developing a sensor capable of determining its own position and attitude with respect to any previously mapped, cratered celestial body by acquiring and processing images of the body’s surface. Integrated into a GNC system, such a sensor enables autonomous and precise landing by providing absolute measurements that can, for instance, support a state estimator. The sensor works in the following steps:

- Image acquisition: taking an image of the target body’s surface

- Crater detection: detecting craters within the image

- Crater identification: identifying unique crater constellations and thereby the craters themselves within the constellations

- Pose estimation: calculating its own position and attitude with respect to the target based on the knowledge of the crater positions and the camera calibration parameters
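The page does not describe how crater constellations are identified. As a minimal sketch of one common idea, the Python code below matches a triangle of detected crater centres against every triple in a catalog by comparing interior angles, which do not change under rotation, translation and uniform scaling. It assumes a near-nadir view, so that the image is approximately a similarity transform of the map. The function names, the brute-force search and the example coordinates are hypothetical; a real sensor must also cope with perspective distortion, false detections and much larger catalogs.

```python
import itertools

import numpy as np


def triangle_signature(points):
    """Return (sorted interior angles, vertex order) of a triangle of 2D points.

    Interior angles are invariant to rotation, translation and uniform scaling,
    so they can be compared between map and image under a similarity transform.
    """
    pts = np.asarray(points, dtype=float)
    angles = np.empty(3)
    for i in range(3):
        a = pts[(i + 1) % 3] - pts[i]
        b = pts[(i + 2) % 3] - pts[i]
        cos_angle = a @ b / (np.linalg.norm(a) * np.linalg.norm(b))
        angles[i] = np.arccos(np.clip(cos_angle, -1.0, 1.0))
    order = np.argsort(angles)  # vertices sorted from smallest to largest angle
    return angles[order], order


def identify_triangle(detected, catalog):
    """Match three detected crater centres against every catalog triple.

    detected: (3, 2) image coordinates of detected crater centres
    catalog:  (N, 2) map coordinates of catalogued crater centres
    Returns ({detected index: catalog index}, matching cost in radians).
    """
    catalog = np.asarray(catalog, dtype=float)
    det_angles, det_order = triangle_signature(detected)
    best_cost, best_match = np.inf, None
    for triple in itertools.combinations(range(len(catalog)), 3):
        cat_angles, cat_order = triangle_signature(catalog[list(triple)])
        cost = float(np.sum(np.abs(det_angles - cat_angles)))
        if cost < best_cost:
            # Vertices with the same angle rank are taken to correspond
            best_cost = cost
            best_match = {int(det_order[k]): triple[int(cat_order[k])] for k in range(3)}
    return best_match, best_cost


# Hypothetical example: three catalog craters seen rotated, scaled and shifted
catalog = np.array([[0, 0], [10, 1], [3, 8], [12, 9], [6, 15], [-4, 6]], dtype=float)
theta = np.deg2rad(30.0)
rot = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
detected = 0.5 * catalog[[3, 0, 4]] @ rot.T + np.array([100.0, 200.0])
match, cost = identify_triangle(detected, catalog)
print(match)  # {1: 0, 2: 4, 0: 3}
```

The exhaustive search grows with the cube of the catalog size, which is one reason practical schemes precompute and index constellation invariants. Isosceles triangles also leave the vertex correspondence ambiguous, so more craters per constellation help.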

The crater navigation sensor works over a wide range of sun elevations and camera perspectives relative to the observed surface. As long as its operating conditions are met, a single image is in principle sufficient to estimate the spacecraft’s position and attitude.
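A simple constraint count shows why a single image can be enough, assuming an ideal pinhole camera. Here $K$ is the calibration matrix, $R$ and $\mathbf{t}$ are the sought attitude and position, $\mathbf{X}_i$ is the mapped position of identified crater $i$, $(u_i, v_i)$ is its image position and $\lambda_i$ its unknown depth; this notation is introduced for illustration only.

$$\lambda_i \begin{pmatrix} u_i \\ v_i \\ 1 \end{pmatrix} = K \left( R\,\mathbf{X}_i + \mathbf{t} \right), \qquad i = 1, \dots, n$$

Eliminating $\lambda_i$ leaves two scalar equations per crater, while the pose has six unknowns (three for attitude, three for position):

$$2n \ge 6 \quad \Longrightarrow \quad n \ge 3$$

Three craters generally admit up to four pose solutions (the classical P3P ambiguity), so a fourth identified crater is typically used to select the correct one.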

The sensor hardware integrates a camera and an electronics unit that processes the camera images and stores and accesses the on-board crater catalog. This allows the sensor to determine its own position and attitude autonomously and in real time.

The sensor has currently reached TRL 4, demonstrated by running the algorithm with the camera demonstrator in a representative environment provided by the TRON lab. Furthermore, the crater navigation performance is well suited to integration with the navigation filter architectures typical of advanced GNC systems. This was fully demonstrated in helicopter flight tests within the DLR project ATON, in which the inertial data of an IMU were fused with the crater navigation fixes by a Kalman filter. The result was used for real-time guidance and control of the vehicle.
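The source states only that a Kalman filter fused IMU data with crater navigation fixes. The following is a toy sketch under strong simplifying assumptions: a 1-D linear Kalman filter in Python whose state is position and velocity, predicted with IMU accelerations and corrected whenever an absolute position fix (standing in for a crater navigation fix) arrives. The function name, noise values and simulated trajectory are hypothetical; a flight-grade filter would typically work in 3-D, also estimate attitude and sensor biases, and use an error-state or extended formulation.

```python
import numpy as np


def run_filter(accels, fixes, dt, x0, P0, accel_var, fix_var):
    """Toy 1-D Kalman filter fusing IMU accelerations with absolute position fixes.

    State x = [position, velocity]. Each step is predicted with the measured
    acceleration; whenever fixes[k] is not None, it is used as a position update
    (standing in for a crater navigation fix).
    """
    F = np.array([[1.0, dt], [0.0, 1.0]])   # state transition over one IMU step
    B = np.array([0.5 * dt**2, dt])         # effect of the measured acceleration
    Q = accel_var * np.outer(B, B)          # process noise from accelerometer noise
    H = np.array([[1.0, 0.0]])              # a fix observes position only
    R = np.array([[fix_var]])
    x = np.asarray(x0, dtype=float)
    P = np.asarray(P0, dtype=float)
    history = []
    for a, z in zip(accels, fixes):
        # Prediction with the inertial measurement
        x = F @ x + B * a
        P = F @ P @ F.T + Q
        # Correction with the absolute fix, when one is available
        if z is not None:
            y = z - H @ x                     # innovation
            S = H @ P @ H.T + R               # innovation covariance
            K = P @ H.T @ np.linalg.inv(S)    # Kalman gain
            x = x + K @ y
            P = (np.eye(2) - K @ H) @ P
        history.append(x.copy())
    return np.array(history)


# Hypothetical scenario: constant acceleration, ideal IMU, a fix every 10 steps
dt, steps, a_true = 0.1, 200, 0.2
t = dt * np.arange(1, steps + 1)
true_pos = 1.0 * t + 0.5 * a_true * t**2    # truth starts at 0 m with 1 m/s
accels = np.full(steps, a_true)
fixes = [true_pos[k] if k % 10 == 9 else None for k in range(steps)]
est = run_filter(accels, fixes, dt, x0=[50.0, 0.0], P0=np.diag([100.0, 4.0]),
                 accel_var=0.01, fix_var=1.0)
print(f"final position error: {abs(est[-1, 0] - true_pos[-1]):.3f} m")
```

Between fixes the estimate follows the inertial propagation alone; each fix pulls the position back towards the absolute measurement, and the sequence of fixes also corrects the velocity through the position-velocity covariance.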

Star Tracker

Because even the closest stars are very far away from the Sun, an observer traveling within the Solar System sees practically no parallax against the starry background. In other words, the part of the sky a camera sees depends only on its viewing direction.
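The small-parallax argument can be checked with a short calculation. The Python sketch below computes the angle subtended at a star by a displacement of the observer perpendicular to the line of sight, using the IAU definition of the parsec as 648000/π astronomical units; the function name is hypothetical. Even for Proxima Centauri, the nearest star at about 1.30 pc, the shift is below one arcsecond per astronomical unit of displacement, and most stars in a navigation catalog are much farther away.

```python
import math

AU_PER_PARSEC = 648000 / math.pi  # IAU definition: 1 pc = 648000/pi au


def parallax_arcsec(baseline_au, distance_pc):
    """Angle (arcsec) subtended at a star distance_pc away by an observer
    displacement of baseline_au perpendicular to the line of sight."""
    angle_rad = math.atan(baseline_au / (distance_pc * AU_PER_PARSEC))
    return math.degrees(angle_rad) * 3600.0


# Proxima Centauri is about 1.30 pc away
print(f"{parallax_arcsec(1.0, 1.30):.2f}")  # prints 0.77
print(f"{parallax_arcsec(5.2, 1.30):.2f}")  # prints 4.00 (displacement of about Jupiter's orbital radius)
```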

This property is widely exploited for spacecraft attitude determination. A star sensor (or star tracker) determines its own orientation with respect to the “constant” starry background by identifying the stars in its images with the help of a star catalog. Further processing steps then yield the star tracker’s orientation with respect to Earth.
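Once the stars are identified, the attitude follows from matched pairs of unit vectors: directions measured in the sensor frame and the corresponding catalog directions. A standard way to compute it is to solve Wahba's problem, sketched here in Python with the SVD method. The source does not state which method the SHEFEX II star tracker uses; the function name and test data are hypothetical, and the result is the orientation relative to the catalog's inertial frame, not yet relative to Earth.

```python
import numpy as np


def attitude_from_stars(body_vecs, ref_vecs, weights=None):
    """Solve Wahba's problem with the SVD method.

    body_vecs: (N, 3) unit vectors towards identified stars, sensor frame
    ref_vecs:  (N, 3) catalog unit vectors of the same stars, inertial frame
    Returns the rotation matrix A that best satisfies body_i ≈ A @ ref_i.
    """
    body = np.asarray(body_vecs, dtype=float)
    ref = np.asarray(ref_vecs, dtype=float)
    w = np.ones(len(body)) if weights is None else np.asarray(weights, dtype=float)
    # Attitude profile matrix: B = sum_i w_i * body_i * ref_i^T
    B = (w[:, None] * body).T @ ref
    U, _, Vt = np.linalg.svd(B)
    # Enforce det(A) = +1 so the result is a rotation, not a reflection
    d = np.linalg.det(U) * np.linalg.det(Vt)
    return U @ np.diag([1.0, 1.0, d]) @ Vt


# Hypothetical test data: five catalog directions and a known attitude
rng = np.random.default_rng(0)
ref = rng.normal(size=(5, 3))
ref /= np.linalg.norm(ref, axis=1, keepdims=True)
c, s = np.cos(0.3), np.sin(0.3)
A_true = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]) @ np.array(
    [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])
body = ref @ A_true.T   # noise-free measured directions
print(np.allclose(attitude_from_stars(body, ref), A_true))  # True
```

With noisy measurements the same function returns the least-squares attitude, and the optional weights let more accurately measured stars count more.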

Within the SHEFEX II project, the GNC systems department built a star tracker for use on sounding rockets.

Tag Navigator

The Tag Navigator is a navigation sensor that measures position and attitude relative to a panel carrying dedicated markers. It is currently used in the EAGLE project to support the navigation system during local flights of the hovering platform.

The sensor platform consists of two components: a panel of artificial markers (design based on AprilTags), and a camera with a computing unit. Given accurate 3D positions of the markers and the camera’s intrinsic calibration parameters, the camera pose relative to the panel can be estimated by formulating the estimation as a Perspective-n-Point (PnP) problem.
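The source names the Perspective-n-Point formulation but not the solver. For a flat marker panel, one classical approach is to estimate the plane-to-image homography and decompose it into rotation and translation. The Python sketch below assumes that the markers lie in the plane Z = 0 of the panel frame, that marker corners have already been detected and matched, and an ideal pinhole camera without lens distortion; function names and numbers are hypothetical. Practical implementations normalize coordinates before the DLT and refine the pose iteratively by minimizing the reprojection error.

```python
import numpy as np


def homography_dlt(obj_xy, img_uv):
    """Direct linear transform: H with [u, v, 1] ~ H @ [X, Y, 1] (>= 4 points)."""
    rows = []
    for (X, Y), (u, v) in zip(obj_xy, img_uv):
        rows.append([-X, -Y, -1.0, 0.0, 0.0, 0.0, u * X, u * Y, u])
        rows.append([0.0, 0.0, 0.0, -X, -Y, -1.0, v * X, v * Y, v])
    _, _, Vt = np.linalg.svd(np.asarray(rows, dtype=float))
    return Vt[-1].reshape(3, 3)   # null vector of the stacked constraints


def pose_from_panel(obj_xy, img_uv, K):
    """Camera pose (R, t) relative to a flat panel whose markers lie in Z = 0.

    Uses K^-1 H = s [r1 r2 t], then recovers the scale s from |r1| = |r2| = 1.
    """
    M = np.linalg.inv(K) @ homography_dlt(obj_xy, img_uv)
    M = M * 2.0 / (np.linalg.norm(M[:, 0]) + np.linalg.norm(M[:, 1]))
    if M[2, 2] < 0:   # choose the sign that puts the panel in front of the camera
        M = -M
    r1, r2, t = M[:, 0], M[:, 1], M[:, 2]
    # Complete the rotation and project it onto the nearest orthonormal matrix
    U, _, Vt = np.linalg.svd(np.column_stack([r1, r2, np.cross(r1, r2)]))
    R = U @ np.diag([1.0, 1.0, np.linalg.det(U @ Vt)]) @ Vt
    return R, t


# Hypothetical setup: 640x480 camera, 0.5 m panel about 2 m away
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
panel_xy = np.array([[0, 0], [0.5, 0], [0.5, 0.5], [0, 0.5], [0.25, 0.1], [0.1, 0.4]])
ca, sa, cb, sb = np.cos(0.2), np.sin(0.2), np.cos(-0.1), np.sin(-0.1)
R_true = np.array([[cb, 0.0, sb], [0.0, 1.0, 0.0], [-sb, 0.0, cb]]) @ np.array(
    [[1.0, 0.0, 0.0], [0.0, ca, -sa], [0.0, sa, ca]])
t_true = np.array([-0.2, -0.1, 2.0])
cam = np.column_stack([panel_xy, np.zeros(len(panel_xy))]) @ R_true.T + t_true
proj = cam @ K.T
img_uv = proj[:, :2] / proj[:, 2:3]   # ideal, distortion-free pixel coordinates
R_est, t_est = pose_from_panel(panel_xy, img_uv, K)
print(np.allclose(R_est, R_true, atol=1e-6), np.allclose(t_est, t_true, atol=1e-6))  # True True
```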

Integration of Optical Navigation Sensors in GNC Systems

Usually, a single type of sensor measures only part of a spacecraft’s full state, e.g. its position or its attitude relative to an external reference system. By combining data from suitable complementary sensors, the full state can be determined. In the area of optical navigation, we focus on fusing the data from optical sensors with information from inertial sensors.

Current work

Within the ATON project, the GNC systems department collaborates with other DLR institutes on the development of a navigation system that integrates inertial sensors with novel optical navigation technologies. The goal is a navigation system that enables autonomous, precise and safe landing on the Moon. The crater navigation technology is intended to be one of the major contributors to the navigation solution, delivering absolute pose updates to the navigation system. Its measurements are fused with data from other sources: an inertial measurement unit (IMU), a star tracker, a laser altimeter, feature tracking and a flash lidar.

EAGLE is a project of the DLR RY-GNC department for demonstrating landing technologies. The Tag Navigator described above is used in this project to provide absolute pose updates to the navigation system, and it has been used during several tethered flight tests of the hovering platform.

Concluded activities

Operating TRON for Hardware-in-the-Loop testing of optical navigation sensors and corresponding GNC systems

By their nature, spacecraft systems are normally not accessible for maintenance after launch, so their reliability must be very high. Continuous testing contributes substantially to achieving this. To this end, the Testbed of Robotic Optical Navigation (TRON) was set up. TRON provides an environment for qualifying breadboards to TRL 4 and flight models to TRL 5-6. It supports testing of both active and passive optical sensors, such as lidars and cameras.