Big Data from Space – seeking solutions for the flood of data from space

- The Big Data from Space conference took place in Munich from 19 to 21 February 2019.

- New ideas and concepts, including those involving artificial intelligence, are needed in order to be able to process data and turn it into information.

- The systematic analysis of archive data by self-learning AI programs is becoming increasingly important in scientific research.

- Focus: Big Data, Artificial Intelligence, digitalisation, space

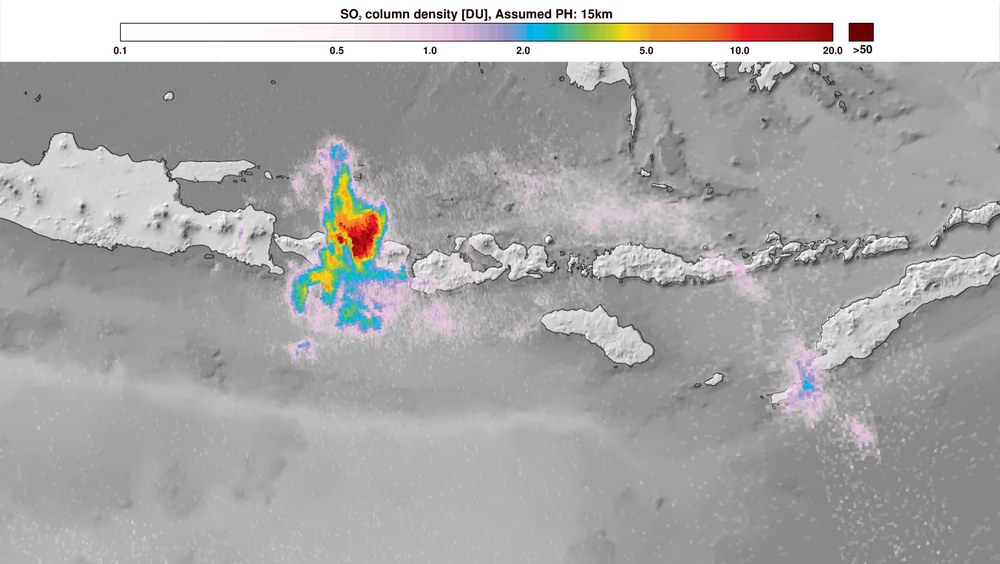

Earth observation satellites provide important data that make it possible, for example, to detect changes in the environment and climate rapidly or to measure the movement and shrinking of glaciers. Up-to-date maps can also be provided to the emergency services in the event of disasters such as floods or earthquakes. However, this requires very large quantities of data to be acquired. The satellites of the European Union's (EU) Copernicus programme are among the biggest producers of data in the world: their high-resolution instruments currently generate approximately 20 terabytes of data every day, roughly equivalent to an HD film with a running time of about one and a half years. In addition, data are provided by German missions such as TerraSAR-X and TanDEM-X, as well as by a growing number of other sources, such as the internet and measurement stations. Processing and analysing these very large and heterogeneous datasets is one of the Big Data challenges facing an increasingly digital society. To find solutions, around 650 experts gathered at the Big Data from Space conference, held in Munich from 19 to 21 February 2019 and hosted by the German Aerospace Center (Deutsches Zentrum für Luft- und Raumfahrt; DLR), the European Space Agency (ESA), the EU Satellite Centre and the Joint Research Centre. The conference was also supported by European Space Imaging, OHB, GAF and Quantum.
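
As a rough plausibility check of the film comparison for the Copernicus data volume, the short Python sketch below converts 20 terabytes per day into the bitrate of a film that plays for one and a half years. It assumes decimal units (1 TB = 10^12 bytes) and 365-day years; the function name is purely illustrative.

```python
def implied_bitrate_mbit_s(terabytes_per_day: float, playback_years: float) -> float:
    """Bitrate (Mbit/s) at which one day's data would fill `playback_years` of video."""
    total_bits = terabytes_per_day * 10**12 * 8          # assumption: decimal terabytes
    playback_seconds = playback_years * 365 * 24 * 3600  # assumption: 365-day years
    return total_bits / playback_seconds / 10**6


# 20 TB per day versus an HD film running for one and a half years
print(f"{implied_bitrate_mbit_s(20, 1.5):.1f} Mbit/s")  # prints "3.4 Mbit/s"
```

A result of roughly 3.4 Mbit/s is of the same order as the bitrate of compressed HD video streams, so the comparison holds up as an estimate.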

“We are receiving very large quantities of data due to the increasing number of satellites, as well as the use of new, higher-resolution sensors, so evaluating these data is proving an ever-greater technological challenge,” says Hansjörg Dittus, DLR Executive Board Member for Space Research and Technology. “For this reason, DLR is increasingly investigating efficient methods and processes such as machine learning. This will enable the provision of informative analysis and recommendations for action on urbanisation, atmospheric change and global warming. DLR is also planning to create the information technology infrastructure required for this purpose – a high-performance data analytics platform.”

“Efficient access, for example via online platforms, is crucial for the analysis of these large quantities of data. The DLR Space Administration is therefore promoting the development of these kinds of options for access and the corresponding processing models. In addition to innovative scientific applications, this opens the way for the development of new business models in the growing downstream sector within Earth observation,” explains Walther Pelzer, DLR Executive Board Member responsible for the Space Administration.

At a reception in the Munich Residenz, Bavaria’s State Minister of Economic Affairs, Regional Development and Energy, Hubert Aiwanger, stressed: “It is remarkable how much space technology defines our everyday lives – navigation, head-up displays and the entire field of assistance robotics are just three examples among many. Today, climate change and environmental protection are at the very top of the political agenda, and not only in Bavaria. Both are global issues, and both require state-of-the-art technology. Scientific study of the climate system, the atmosphere, tropical rainforests, the oceans and the polar regions are all fields in which space can provide vital data that enables a better understanding of ecosystems and affords a better chance of protecting them. Bavaria has a strong aerospace sector, with a total of 65,000 engineers, technicians and skilled workers. They generate an annual revenue of 10 billion euro.”

Artificial intelligence guiding data selection

New ideas and concepts are needed in order to process these data and turn them into information. Artificial intelligence plays a major role here, as AI methods are extremely powerful, especially when large amounts of data are involved. DLR scientist Xiaoxiang Zhu, who is based at the Technical University of Munich, is researching the use of such methods. Together with her team, Zhu is developing exploratory algorithms from signal processing and artificial intelligence (AI), particularly machine learning, in order to significantly improve the acquisition of global geoinformation from satellite data and achieve breakthroughs in the geosciences and environmental sciences. Novel data science algorithms allow the scientists to go one step further by merging petabytes of data from complementary geo-relevant sources, ranging from Earth observation satellites to social media networks. Their findings have the potential to address previously insoluble challenges, such as recording and mapping global urbanisation, one of the most important megatrends of global change.

Yet satellite remote sensing is not the only field grappling with this challenge. Observing in the opposite direction, looking from Earth into space, also generates enormous amounts of data. ESA's Gaia space telescope already delivers large quantities of data, and future facilities such as ESA's Euclid mission and the Square Kilometre Array (SKA) radio telescope in South Africa and Australia will add even more. The systematic analysis of archive data by self-learning AI programs is therefore becoming increasingly important in astronomical research.

Intelligent search portals provide the desired information

The examples of Earth observation satellites and telescopes show that methods drawn from data science and artificial intelligence are indispensable. Handling and evaluating such large quantities of data also requires suitable storage and intelligent access options. For interested users, access is the key factor. Today, this mostly takes the form of an online portal such as CODE-DE (Copernicus Data and Exploitation Platform, Germany), which is run by the DLR Space Administration. It allows users to access all of the data and process them directly on the platform. Scientists thus have a convenient online working environment for studying satellite data, which are provided free of charge under the Copernicus programme. As a result, users save local storage space and computing capacity.

The data portals themselves are also a subject of research. What makes an ideal platform, and which search options should be offered to users? Following the example of internet search engines, it should be easy to find images of distant planets or of Earth’s surface by entering queries such as “Which celestial bodies are considered likely to have water?” or “Which areas of Germany are particularly affected by drought in summer?” Modern portals should return the appropriate datasets in response to such queries. The long-term availability of this global information is also of great importance. As with a library, it must be ensured that satellite data are preserved and can be found and retrieved over long periods of time. This is the purpose of facilities such as the German Satellite Data Archive (D-SDA) at the DLR Earth Observation Center in Oberpfaffenhofen.

Time series – simultaneously a curse and a blessing

Time series are one of the major benefits of modern Earth observation. Comparing global glacier dynamics over time, for example, provides valuable information about climate change, sea-level change and regional water balance. Yet extensive time series involve very large quantities of data that are extremely difficult to transfer or process. DLR products such as the World Settlement Footprint (WSF Evolution), a high-resolution global map of urban growth over the last 30 years, can only be created using powerful data centres and automated evaluation processes. The fact that this was unthinkable just a few years ago shows the potential of combining large amounts of data with automated processes. Scientists are also working on a tool that automatically compares information from time series, thereby avoiding such transfer and processing bottlenecks. This saves time and storage capacity during analysis. In future, however, satellite information will be available not only to Earth observation experts; non-specialists will also be able to use such products. At present, this is still highly problematic, because the data made available so far are largely unprocessed. IT experts are now working to change this by providing analysis-ready data and services.
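
The article does not describe how the DLR comparison tool works. Purely to illustrate why automated comparison of a time series can save storage, the Python sketch below shows one generic approach, threshold-based change detection with delta storage: the first image of a series is kept in full, and for each later image only the pixels whose values changed by more than a threshold are stored. Images are simplified to flat lists of pixel values, and all names and the threshold value are hypothetical.

```python
from typing import List, Tuple

Delta = List[Tuple[int, float]]  # (pixel index, new value) pairs for one time step


def encode_series(frames: List[List[float]], threshold: float) -> Tuple[List[float], List[Delta]]:
    """Hypothetical encoder: keep the first frame, then store only changed pixels."""
    base = list(frames[0])
    state = list(base)  # what a decoder would reconstruct so far
    deltas: List[Delta] = []
    for frame in frames[1:]:
        # Compare against the reconstructed state (not the previous raw frame),
        # so the reconstruction error never exceeds `threshold`.
        delta = [(i, v) for i, (old, v) in enumerate(zip(state, frame))
                 if abs(v - old) > threshold]
        for i, v in delta:
            state[i] = v
        deltas.append(delta)
    return base, deltas


def decode_frame(base: List[float], deltas: List[Delta], step: int) -> List[float]:
    """Rebuild frame number `step` (0 = base) by replaying the stored changes."""
    frame = list(base)
    for delta in deltas[:step]:
        for i, v in delta:
            frame[i] = v
    return frame


# Three tiny "images" of four pixels each; small fluctuations (0.1) are ignored.
frames = [[0.0, 0.0, 5.0, 5.0], [0.0, 0.1, 5.0, 9.0], [3.0, 0.1, 5.0, 9.0]]
base, deltas = encode_series(frames, threshold=0.5)
print(deltas)  # [[(3, 9.0)], [(0, 3.0)]]
```

Only two pixel values are stored for the two later images instead of eight, at the cost of ignoring changes smaller than the threshold; real systems would choose the threshold and the change measure according to the sensor and the question being studied.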